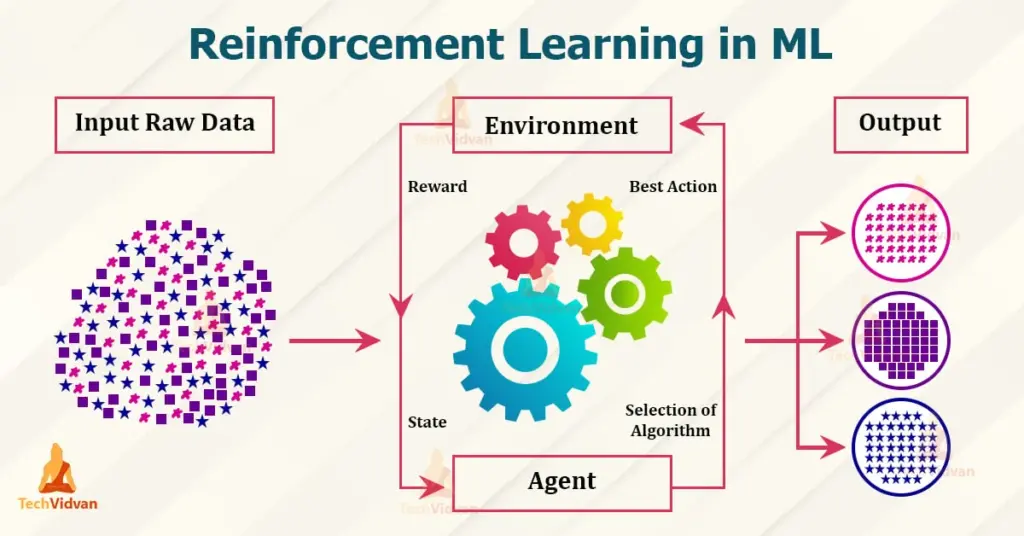

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. The goal is to maximize cumulative reward over time by learning, through trial and error, which actions work best in each situation. Unlike supervised learning, RL doesn’t rely on labeled data; instead, it learns from feedback in the form of rewards or penalties.

Key Concepts in Reinforcement Learning:

- Agent: The decision-maker in the RL system. It interacts with the environment to learn optimal behavior.

- Environment: The external system with which the agent interacts. It responds to each of the agent’s actions with a new state and a reward.

- State ($S$): A representation of the current situation or condition of the environment.

- Action ($A$): A move the agent can make in a given state; the set of all available moves is the action space.

- Reward ($R$): A scalar value the agent receives after taking an action. It indicates the immediate benefit of that action.

- Policy ($\pi$): A strategy or mapping from states to actions that defines the agent’s behavior.

- Value Function ($V$): Estimates the expected cumulative reward from a given state, assuming the agent follows a particular policy.

- Q-Value (Action-Value) Function ($Q$): Estimates the expected cumulative reward from taking a given action in a given state and following a particular policy afterwards.

- Exploration vs. Exploitation:

  - Exploration: Trying new actions to discover their effects.

  - Exploitation: Choosing actions based on past experience to maximize rewards.
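Two of these ideas, cumulative reward and the exploration/exploitation trade-off, can be made concrete in a few lines. The sketch below assumes future rewards are discounted by a factor `gamma` (the discount factor used in the update rules later in this article) and uses ε-greedy selection, which is one common way to balance exploration and exploitation, not the only one. The function names are illustrative.

```python
import random


def discounted_return(rewards, gamma):
    """Cumulative discounted reward: G = r_0 + gamma*r_1 + gamma**2*r_2 + ..."""
    g = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon (uniformly random action);
    otherwise exploit by picking the action with the highest estimated value."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


if __name__ == "__main__":
    # 1 + 0.9*0 + 0.9**2 * 2, i.e. roughly 2.62
    print(discounted_return([1.0, 0.0, 2.0], gamma=0.9))
    # With epsilon = 0 the agent always exploits, so it picks index 1 (value 0.5).
    print(epsilon_greedy([0.1, 0.5, 0.2], epsilon=0.0))
```

A smaller `gamma` makes the agent more short-sighted; a larger `epsilon` makes it explore more at the cost of exploiting what it already knows.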

Types of Reinforcement Learning:

- Model-Free RL:

  - The agent learns directly from interactions with the environment, without building or using a model of how the environment responds to its actions.

  - Examples: Q-learning, SARSA.

- Model-Based RL:

  - The agent builds a model of the environment (which states and rewards follow each action) and uses it to plan its decisions.

  - Examples: Dyna-Q.
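To make the model-free/model-based distinction concrete, here is a minimal Dyna-Q sketch on a hypothetical five-state corridor (`ChainEnv` is invented for illustration). Each real step performs a model-free Q-learning update, records the observed transition in a learned model, and then replays `planning_steps` simulated transitions drawn from that model. The model is a plain lookup table, which assumes the environment is deterministic; the hyperparameter values are arbitrary.

```python
import random
from collections import defaultdict


class ChainEnv:
    """Hypothetical corridor with states 0..4. Action 0 moves left, action 1 moves right.
    Reaching state 4 gives reward 1 and ends the episode."""
    n_states, n_actions, goal = 5, 2, 4

    def step(self, s, a):
        s_next = max(0, s - 1) if a == 0 else min(self.goal, s + 1)
        reward = 1.0 if s_next == self.goal else 0.0
        return s_next, reward, s_next == self.goal


def dyna_q(env, episodes=50, planning_steps=10, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)  # Q[(s, a)], defaults to 0
    model = {}              # learned model: (s, a) -> (reward, s_next, done)

    def greedy(s):
        # Break ties randomly so an untrained agent does not always pick action 0.
        best = max(q[(s, a)] for a in range(env.n_actions))
        return rng.choice([a for a in range(env.n_actions) if q[(s, a)] == best])

    def update(s, a, r, s_next, done):
        # Q-learning target; terminal transitions have no future value.
        target = r if done else r + gamma * max(q[(s_next, b)] for b in range(env.n_actions))
        q[(s, a)] += alpha * (target - q[(s, a)])

    for _ in range(episodes):
        s, done = 0, False
        while not done:
            a = rng.randrange(env.n_actions) if rng.random() < epsilon else greedy(s)
            s_next, r, done = env.step(s, a)
            update(s, a, r, s_next, done)       # direct (model-free) learning
            model[(s, a)] = (r, s_next, done)   # model learning
            for _ in range(planning_steps):     # planning with simulated experience
                ps, pa = rng.choice(list(model))
                update(ps, pa, *model[(ps, pa)])
            s = s_next
    return q


if __name__ == "__main__":
    q = dyna_q(ChainEnv())
    print([max(range(2), key=lambda a: q[(s, a)]) for s in range(4)])  # greedy action per state
```

With `planning_steps=0` this reduces to plain model-free Q-learning; larger values let the agent extract more learning from each real interaction, at the cost of extra computation.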

Popular Algorithms:

- Q-Learning (Off-Policy):

  - A model-free algorithm that learns the value of taking an action in a state. It updates its estimates with a rule derived from the Bellman optimality equation: $Q(s, a) \leftarrow Q(s, a) + \alpha \left[ R + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]$

  - Where:

    - $\alpha$ is the learning rate.

    - $\gamma$ is the discount factor.

    - $s'$ is the next state, and $a'$ ranges over the actions available in $s'$.

- SARSA (On-Policy):

  - Similar to Q-learning, but the update uses the next action $a'$ that the current policy actually takes in $s'$, rather than the greedy maximum: $Q(s, a) \leftarrow Q(s, a) + \alpha \left[ R + \gamma Q(s', a') - Q(s, a) \right]$

- Deep Q-Networks (DQN):

  - Combines Q-learning with deep neural networks to handle high-dimensional state spaces (e.g., images).

- Policy Gradient Methods:

  - Directly optimize the policy parameters $\theta$ by gradient ascent on the expected return $J(\theta)$: $\theta \leftarrow \theta + \alpha \nabla_\theta J(\theta)$

- Actor-Critic Methods:

  - Combine policy gradients (the actor, which selects actions) with value function estimation (the critic, whose value estimates guide the actor’s updates).
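The practical difference between the Q-learning and SARSA rules above is the bootstrap target. A minimal tabular sketch, assuming `Q` is a dict mapping each state to a list of action values (a representation chosen here for brevity); the transition and numbers are made up for illustration:

```python
def q_learning_update(Q, s, a, r, s_next, alpha, gamma):
    """Off-policy: bootstrap from the best action in s_next, whatever the agent does next."""
    td_target = r + gamma * max(Q[s_next])
    Q[s][a] += alpha * (td_target - Q[s][a])


def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    """On-policy: bootstrap from the action a_next that the policy actually chose in s_next."""
    td_target = r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (td_target - Q[s][a])


if __name__ == "__main__":
    # One transition: state 0, action 0, reward 1, next state 1.
    # In state 1, action 1 looks best (value 10), but an exploring policy picks action 0 (value 2).
    ql_table = {0: [0.0, 0.0], 1: [2.0, 10.0]}
    sarsa_table = {0: [0.0, 0.0], 1: [2.0, 10.0]}
    q_learning_update(ql_table, 0, 0, 1.0, 1, alpha=0.5, gamma=0.5)
    sarsa_update(sarsa_table, 0, 0, 1.0, 1, a_next=0, alpha=0.5, gamma=0.5)
    print(ql_table[0][0])     # target 1 + 0.5*10 = 6, so 0 + 0.5*(6 - 0) = 3.0
    print(sarsa_table[0][0])  # target 1 + 0.5*2 = 2, so 0 + 0.5*(2 - 0) = 1.0
```

When the policy happens to choose the greedy action, the two rules coincide; they differ only when the policy explores. That is why SARSA’s estimates account for the cost of exploratory moves, while Q-learning estimates the value of acting greedily.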
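The policy-gradient update $\theta \leftarrow \theta + \alpha \nabla_\theta J(\theta)$ can be sketched with REINFORCE, a basic policy-gradient estimator, on a hypothetical two-armed bandit. Episodes are a single step, so the return is just the reward. The policy is a softmax over one parameter per arm; for that parameterization $\nabla_{\theta_k} \log \pi(a) = \mathbb{1}[k = a] - \pi(k)$, and `reward * grad_log_pi` is an unbiased sample of $\nabla_\theta J(\theta)$. The arm means, Gaussian reward noise, and step size are illustrative assumptions.

```python
import math
import random


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(true_means, steps=2000, alpha=0.1, seed=0):
    """REINFORCE on a multi-armed bandit with a softmax policy over per-arm parameters theta.
    Returns the final action probabilities."""
    rng = random.Random(seed)
    theta = [0.0] * len(true_means)
    for _ in range(steps):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs)[0]  # sample an action from the policy
        reward = rng.gauss(true_means[a], 1.0)                 # hypothetical noisy reward
        for k in range(len(theta)):
            # Softmax score function: d/d theta_k of log pi(a) = 1[k == a] - pi(k).
            grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += alpha * reward * grad_log_pi  # gradient ascent on J(theta)
    return softmax(theta)


if __name__ == "__main__":
    # Probability mass should shift toward arm 1, which has the higher mean reward.
    print(reinforce_bandit([0.0, 1.0]))
```

Actor-critic methods refine exactly this update: instead of scaling by the raw return, they scale by a critic-based quantity (for example, the return minus a learned value estimate), which typically reduces the variance of the gradient estimate.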

Applications of Reinforcement Learning:

- Game Playing:

  - RL is used in games like chess, Go, and video games. AlphaGo and AlphaZero are famous examples.

- Robotics:

  - Robots learn to perform tasks like walking, grasping objects, or navigating through complex environments.

- Autonomous Vehicles:

  - RL helps in decision-making for self-driving cars, such as lane changes and obstacle avoidance.

- Finance:

  - RL is used in portfolio optimization, trading strategies, and risk management.

- Healthcare:

  - RL can optimize treatment strategies and personalize patient care.

- Recommendation Systems:

  - Adaptive systems learn to recommend content or products based on user interactions.

- Energy Management:

  - RL optimizes energy consumption in smart grids and data centers.